Implications for Bicyclists and Pedestrians

Will autonomous cars and automatic braking systems live up to their promise to improve public safety? Or will a rush to market flawed technology create greater peril for pedestrians and bicyclists?

by Steven Goodridge, Ph.D.

As I pedaled my bike along the road, Mercedes driver Farhan Ghanty approached me from behind at 45 mph, engaged the self-drive function of the E-Class luxury car, and took his hands off the wheel.

It was December 4, 2017; BikeWalk NC Director Lisa Riegel and I were attending an autonomous vehicle demonstration held for the NC House of Representatives at the North Carolina State Highway Patrol test track in Raleigh. Semi-autonomous and fully autonomous vehicles from over a dozen manufacturers including Tesla, Mercedes, and Cadillac carried House members around the track as industry experts lobbied for legislation to accommodate autonomous vehicles in the state. With semi-autonomous “driver-assist” systems on the road today, and fully autonomous vehicles being deployed for real-world testing, the question of how state laws that address the responsibilities and liabilities of “drivers” should treat automated systems could no longer be postponed. But BikeWalk NC had a different question: How well do these systems really behave around pedestrians and bicyclists?

Walking from car to car, I asked the manufacturer reps to describe the driver-assist functions and sensors featured on each vehicle. The products varied widely, from lane-keeping assist systems to semi-autonomous hands-free driving, as did the sensors, which included monocular and stereo cameras, radar, and lidar. Some of the manufacturers were eager to tout the pedestrian detection and automatic braking capabilities of their vehicles, while others explained that their cars were not designed to detect people. This much I expected from my research into the industry over the last few years, but it was novel to have the opportunity to compare the products side-by-side in person.

When Brand Specialist Farhan Ghanty expressed his confidence in the ability of the Mercedes E-Class Drive Pilot to detect and slow for a bicyclist on the road ahead, I couldn’t resist.

“I brought my bike. Can we test that right here, right now?”

Farhan agreed. We told the Highway Patrol officers what we were up to, and I pedaled out onto the track. Farhan started his test lap with witnesses in his car, including a member of the press. He approached me from behind at 45 mph with the self-driving mode engaged and his foot over the brake pedal as a precaution. A video drone recorded the event from overhead.

The test result was drama-free. According to an indicator on the dashboard, the car’s stereo cameras and radar sensors detected me from a long distance away and the car slowed gradually, well before it needed to. It matched my speed and followed for a short time before I turned off the track.

This was a softball test for a self-driving car: broad daylight, open road, no other vehicles or clutter, and a fully attentive driver to take over if needed. In the real world, self-driving cars have not always fared so well. Two Tesla vehicles have been involved in high-profile crashes in which their self-driving systems failed to avoid collisions that appeared completely avoidable: one in 2016 involving a left-turning truck, and one last week involving a stopped fire engine. If Tesla’s premier “Autopilot” system cannot reliably see or brake for large trucks, what does that imply for smaller and more vulnerable pedestrians and bicyclists?

When it comes to automated driving and braking systems, one thing is clear: All products are not created equal. Investigation of the state of the art and numerous test results reveals that some technologies do an extraordinary job of avoiding collisions with pedestrians and cyclists, while others are practically blind to them. Some self-driving systems are intended for use only on freeways devoid of pedestrians and bicyclists, yet car owners are activating them on ordinary streets. Bike/ped advocates have a vested interest in promoting effective regulations for such vehicles. For safety advocates to know what regulations to support, it’s useful to understand how the technology works and why some systems fail more than others.

Sensors

Autonomous vehicles use sensors to detect, classify, and/or localize pedestrians and bicyclists in the street environment. Some types of sensors reliably detect that something significant is in the road but may have difficulty telling what the object is. Other sensors can classify objects into separate categories such as pedestrians and bicyclists, but only after they have been detected by other means, or under good observing conditions. Classification is useful in predicting the likely movement of objects in the streetscape because pedestrians, bicyclists, and garbage cans behave differently. Finally, the ability of a sensor to accurately localize and track the position of a pedestrian or bicyclist in three-dimensional space is essential for the driving system to determine whether it needs to yield by braking or changing lanes.

Camera Sensors: Stereo Vision

A pair of forward-looking cameras can provide an autonomous system with three-dimensional depth perception of the environment ahead. Video-processing algorithms determine how features such as edges and textures correspond between the two camera images; the parallax between these features is then used to estimate a range for every pixel location in the scene. Pixel-level image resolution provides a precise measurement of the angle to an object, while the accuracy of the range estimate decreases with distance. Stereo vision systems can be very effective at detecting and localizing visible, human-sized objects in the streetscape regardless of the ability to classify them. If a human-sized object is detected in the middle of the roadway, an autonomous system can be programmed to avoid driving into it regardless of classification. The main limitation of stereo vision systems is the varying degree of object visibility at distance under different lighting, weather, and reflectance conditions.
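To make the stereo geometry concrete, here is a minimal sketch assuming an idealized, rectified pinhole stereo pair. It shows why bearing is precise (one pixel spans only a tiny angle) while the range error grows with the square of distance. The focal length, baseline, and quarter-pixel matching error are hypothetical values, not figures from any production system.

```python
import math


def stereo_range_m(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Range to a matched feature from its disparity: Z = f * B / d (rectified pinhole pair)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to give a finite range")
    return focal_px * baseline_m / disparity_px


def stereo_range_error_m(range_m: float, focal_px: float, baseline_m: float,
                         disparity_err_px: float = 0.25) -> float:
    """First-order range uncertainty: dZ = Z**2 * dd / (f * B), so it grows with range squared."""
    return range_m ** 2 * disparity_err_px / (focal_px * baseline_m)


def bearing_deg(u_px: float, center_px: float, focal_px: float) -> float:
    """Horizontal angle to a pixel column; one pixel spans roughly 1/f radians."""
    return math.degrees(math.atan((u_px - center_px) / focal_px))


if __name__ == "__main__":
    f, b = 1400.0, 0.20  # hypothetical focal length (pixels) and camera baseline (meters)
    for z in (10.0, 20.0, 40.0, 80.0):
        d = f * b / z
        print(f"{z:4.0f} m: disparity {d:5.1f} px, range error ~{stereo_range_error_m(z, f, b):.2f} m")
    print(f"one-pixel bearing step: {bearing_deg(641, 640, f):.3f} deg")
```

Doubling the distance quadruples the range uncertainty under this model, while the angular step per pixel stays fixed, which is the asymmetry the paragraph describes.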

Camera Sensors: Object Recognition

Many camera-equipped systems use pattern-recognition algorithms to classify objects as road signs, traffic barrels, cars, trucks, motorcycles, bicyclists, and pedestrians. The fine pixel resolution of an image enables precise angular localization of recognized objects. Range may be estimated accurately from stereo disparity if two cameras are used; otherwise, a monocular system must estimate range roughly from the expected object size or from the object’s displacement below the horizon.
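The two monocular shortcuts can be sketched with a simple pinhole-camera model, assuming a flat road and a known camera height. The numbers are hypothetical; they illustrate why such estimates are rough: a detector that assumes adult height misjudges the range to a child, and a small pitch error shifts the horizon-based estimate.

```python
def range_from_size_m(focal_px: float, assumed_height_m: float, image_height_px: float) -> float:
    """Pinhole model: an object of height H that spans h pixels is at Z = f * H / h."""
    return focal_px * assumed_height_m / image_height_px


def range_from_horizon_m(focal_px: float, camera_height_m: float, rows_below_horizon_px: float) -> float:
    """Flat-road model: a ground contact point v pixels below the horizon is at Z = f * h_cam / v."""
    return focal_px * camera_height_m / rows_below_horizon_px


if __name__ == "__main__":
    f = 1400.0  # hypothetical focal length in pixels
    # A 1.2 m child at 20 m spans f * 1.2 / 20 = 84 px, but the detector assumes adult height.
    print(range_from_size_m(f, 1.75, 84.0))      # overestimates range (about 29 m)
    # Camera 1.3 m above a flat road; a 2 px pitch error shifts the horizon estimate.
    print(range_from_horizon_m(f, 1.3, 91.0))    # 20 m with a perfect horizon
    print(range_from_horizon_m(f, 1.3, 89.0))    # about 20.4 m with a 2 px error
```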

Recent advances in machine-learning techniques have made object-classification systems much more effective than they were just a few years ago. Deep neural networks – mathematical functions with many layers of nodes which resemble the connectivity of brain neurons – are now practical to train thanks to clever new algorithms and the availability of “big data” image sets. The heavy mathematics can now be applied to every pixel in a video stream in real time thanks to miniature supercomputers built from inexpensive graphics processing units (GPUs) originally developed for PCs used by gamers.

The nearly infinite variety of human appearance, clothing and body pose makes it challenging for pattern-recognition algorithms to extract people reliably from cluttered, real-world backgrounds. If the vision algorithm is too permissive, it can falsely classify background clutter and shadows as people, which could result in unnecessary and potentially hazardous emergency braking. Conversely, if the computer-vision algorithm fails to recognize a real person or vehicle in a scene, the driving system is blind unless a redundant sensing mode can detect the potential for collision. In 2017, IEEE Spectrum reported that algorithms used by computer-vision and autonomous vehicle researchers were able to recognize bicyclists in isolated 2D images only 74% of the time. Today, computer-vision algorithms are still limited enough that autonomous vehicles can’t drive on video alone, especially with just a monocular camera.

Automotive Radar

Radar systems actively sense range to objects by transmitting a continuously varying radio signal and measuring reflections from the environment. A computer compares the incoming and outgoing signals to estimate both the distance and relative approach velocity of objects with high accuracy. Determining the angle to objects is more challenging. Instead of using a rotating antenna like an airport radar, automotive radar systems scan across the sensing area by using an array of electronically steered antennas. Beamforming is a technique used to aim the effective direction of a radar beam by changing the delay between different transmitting antennas so that the signals add constructively in a specified direction. Multiple receiving antennas allow further angular refinement. However, the beam remains wide enough that angular resolution is limited. Most radar systems are insufficient for making decisions that require precise lateral localization, such as whether there is enough room to pass a bicyclist or pedestrian ahead on the roadway edge without changing lanes.
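The radar relationships above can be sketched with textbook formulas, assuming a 77 GHz frequency-modulated continuous-wave (FMCW) radar and a uniform antenna array. FMCW is one common way to implement the “continuously varying” signal; the chirp slope and channel count below are hypothetical, and real products use more elaborate processing. The sketch shows range from the beat frequency, closing speed from the Doppler shift, the per-antenna phase step used in beamforming, and a rule-of-thumb cross-range resolution.

```python
import math

C_MPS = 299_792_458.0  # speed of light


def wavelength_m(carrier_hz: float = 77e9) -> float:
    return C_MPS / carrier_hz


def fmcw_range_m(beat_hz: float, chirp_slope_hz_per_s: float) -> float:
    """FMCW: echo delay tau = 2R/c mixes down to a beat frequency f_b = S * tau, so R = c * f_b / (2S)."""
    return C_MPS * beat_hz / (2.0 * chirp_slope_hz_per_s)


def closing_speed_mps(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Two-way Doppler shift f_d = 2v / lambda gives radial velocity v = f_d * lambda / 2."""
    return doppler_hz * wavelength_m(carrier_hz) / 2.0


def steering_phase_step_rad(steer_deg: float, spacing_m: float, carrier_hz: float = 77e9) -> float:
    """Phase offset between adjacent antennas that makes their signals add up toward steer_deg."""
    return 2.0 * math.pi * spacing_m * math.sin(math.radians(steer_deg)) / wavelength_m(carrier_hz)


def cross_range_resolution_m(range_m: float, n_antennas: int, spacing_m: float,
                             carrier_hz: float = 77e9) -> float:
    """Rule of thumb: beamwidth ~ lambda / (N * d) radians, so lateral blur ~ R * lambda / (N * d)."""
    return range_m * wavelength_m(carrier_hz) / (n_antennas * spacing_m)


if __name__ == "__main__":
    half_wave = wavelength_m() / 2.0
    # Hypothetical 12-channel array at half-wavelength spacing.
    for r in (20.0, 50.0, 100.0):
        print(f"{r:5.0f} m: cross-range resolution ~{cross_range_resolution_m(r, 12, half_wave):.1f} m")
```

With these assumed parameters the lateral blur at 50 m is a little over 8 m, wider than a travel lane, which illustrates why deciding whether there is room to pass a bicyclist at the road edge is hard for radar alone. The figure depends strongly on the assumed channel count.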

Pedestrian detection and classification with radar can be enhanced through the use of micro-doppler signal analysis. The movement of a pedestrian’s arms and legs while walking provides a unique time-varying doppler signature that can be detected in the radar signal by a pattern-recognition algorithm. This signature allows the radar system to distinguish pedestrians from other objects and to start tracking their movement sooner and among clutter, such as when a pedestrian is stepping out from between parked cars. Bicyclists present more of a challenge to distinguish from clutter because they move their body less, especially when coasting.
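A toy illustration of the micro-doppler idea, assuming a 77 GHz radar: a rigid reflector produces a single Doppler frequency, while a walker’s swinging foot sweeps a band of frequencies over each stride. The sinusoidal foot-speed model is a deliberate simplification chosen for illustration, not a biomechanical model, and real classifiers analyze full time-frequency spectrograms rather than a single spread value.

```python
import math

WAVELENGTH_M = 299_792_458.0 / 77e9  # assumes a 77 GHz automotive radar


def doppler_hz(radial_speed_mps: float) -> float:
    """Two-way Doppler shift for a reflector moving at radial_speed_mps toward or away from the radar."""
    return 2.0 * radial_speed_mps / WAVELENGTH_M


def walker_foot_speed_mps(t_s: float, torso_mps: float = 1.4, stride_hz: float = 1.0,
                          swing_mps: float = 1.4) -> float:
    """Toy model (assumption): a foot's speed oscillates around torso speed, from about zero
    while planted to about twice torso speed while swinging forward."""
    return torso_mps + swing_mps * math.sin(2.0 * math.pi * stride_hz * t_s)


def doppler_spread_hz(speed_fn, duration_s: float = 2.0, samples: int = 200) -> float:
    """Width of the Doppler band a reflector sweeps over time; zero for rigid, steady motion."""
    shifts = [doppler_hz(speed_fn(i * duration_s / samples)) for i in range(samples)]
    return max(shifts) - min(shifts)


if __name__ == "__main__":
    print(f"rigid object at 1.4 m/s: {doppler_spread_hz(lambda t: 1.4):.0f} Hz spread")
    print(f"walker's swinging foot:  {doppler_spread_hz(walker_foot_speed_mps):.0f} Hz spread")
    print(f"coasting cyclist body:   {doppler_spread_hz(lambda t: 5.0):.0f} Hz spread")
```

The coasting case shows the paragraph’s point about bicyclists: a rider who is not moving their body presents almost no time-varying signature to distinguish them from clutter.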

Sensor Fusion

The accuracy of object detection, classification and localization is improved by combining data from complementary sensors. Cameras provide good angular resolution while radar provides good range precision; vision algorithms can classify visible objects but radar can detect obstacles in darkness and glare. Volvo has used this combination for pedestrian and bicyclist collision avoidance since 2013. Ford Motor Company’s recent marketing campaign for the 2018 Mustang has highlighted Ford’s use of radar/video sensor fusion to enhance the vehicle’s automatic emergency braking performance, particularly for pedestrians and at night.
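The complementarity can be sketched in a few lines, assuming independent Gaussian errors: take range from the radar and bearing from the camera, and combine overlapping estimates by inverse-variance weighting. The error magnitudes are hypothetical, and production systems typically use tracking filters (for example Kalman filters) rather than this single-shot combination.

```python
import math


def position_from_radar_and_camera(radar_range_m: float, camera_bearing_deg: float):
    """Pair radar's precise range with the camera's precise bearing: (ahead, lateral) in meters."""
    th = math.radians(camera_bearing_deg)
    return radar_range_m * math.cos(th), radar_range_m * math.sin(th)


def lateral_sigma_m(range_m: float, bearing_sigma_deg: float) -> float:
    """Lateral position uncertainty produced by a given bearing uncertainty (small-angle approximation)."""
    return range_m * math.radians(bearing_sigma_deg)


def fuse_inverse_variance(est_a: float, var_a: float, est_b: float, var_b: float):
    """Combine two independent estimates of the same quantity, weighting each by 1 / variance."""
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    return (w_a * est_a + w_b * est_b) / (w_a + w_b), 1.0 / (w_a + w_b)


if __name__ == "__main__":
    # Hypothetical 1-sigma bearing errors: radar 2 degrees, camera 0.1 degree, target 50 m ahead.
    print(f"lateral sigma, radar bearing:  {lateral_sigma_m(50.0, 2.0):.2f} m")
    print(f"lateral sigma, camera bearing: {lateral_sigma_m(50.0, 0.1):.2f} m")
    print(position_from_radar_and_camera(50.0, 1.0))
    # Fuse a radar range (variance 0.01 m^2) with a coarse monocular range (variance 4 m^2).
    print(fuse_inverse_variance(50.0, 0.01, 47.0, 4.0))
```

The fused estimate leans heavily on whichever sensor is more certain for each quantity, which is the sense in which the combination beats either sensor alone.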

Lidar

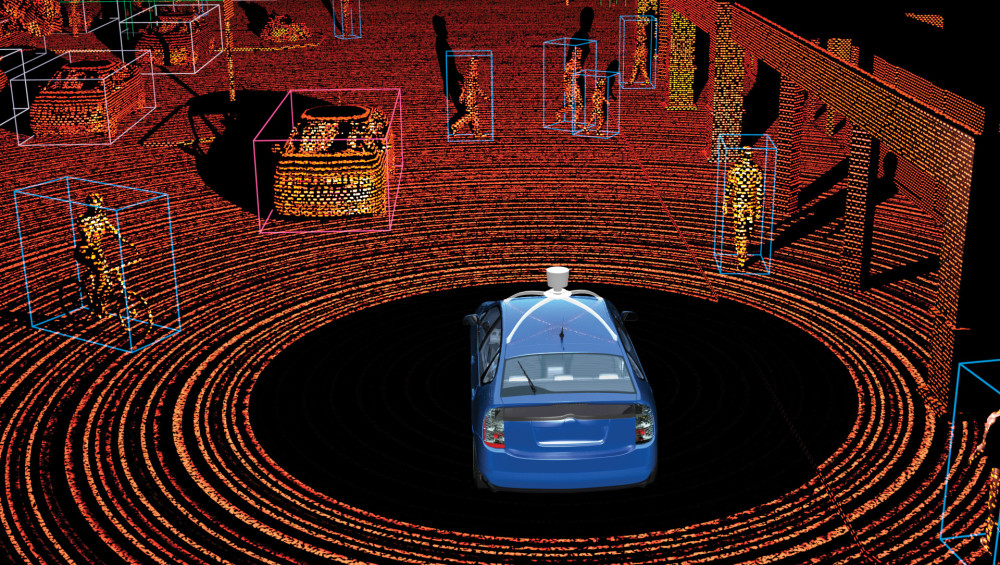

Lidar uses rapidly scanning infrared laser beams to generate a 3D point cloud of measurements with sufficient resolution to detect, classify and localize cars, pedestrians, bicyclists, curbs, and even small animals around the vehicle. Due to the three-dimensional nature of the data, lidar systems can detect and track a human-sized or larger object in the streetscape even before classifying it as a motorcyclist, bicyclist, or pedestrian. As the distance between the vehicle and object decreases, the increased number of points on the object makes classification based on shape possible, although vision sensors may also play a role in classification. Lidar sensors are unaffected by darkness and glare and suffer relatively minor degradation in rain, snow, and fog. Early-generation automotive lidar units use a spinning platform to sweep dozens of individual beams across the environment. Next-generation units, however, use solid-state electronics to sweep the laser beams, allowing the sensor to be much smaller and less expensive – as low as $100 per device when manufactured in quantity.
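Why classification becomes easier as the object gets closer can be sketched with simple geometry: the spacing between lidar beams at the target grows linearly with range, so the number of returns on a target falls roughly as one over range squared. The angular resolutions and bicyclist dimensions below are assumptions for illustration, not the specification of any particular sensor.

```python
import math


def expected_lidar_points(range_m: float, width_m: float, height_m: float,
                          h_res_deg: float = 0.2, v_res_deg: float = 0.4) -> float:
    """Approximate count of returns on a flat target facing the sensor.

    Beam spacing at the target grows linearly with range, so the count falls as 1 / range**2.
    The angular resolutions are hypothetical defaults, not the spec of any particular unit.
    """
    h_spacing_m = range_m * math.radians(h_res_deg)
    v_spacing_m = range_m * math.radians(v_res_deg)
    return (width_m / h_spacing_m) * (height_m / v_spacing_m)


if __name__ == "__main__":
    # A bicyclist seen from behind, roughly 0.6 m wide and 1.7 m tall (assumed dimensions).
    for r in (10.0, 20.0, 40.0, 80.0):
        print(f"{r:4.0f} m: ~{expected_lidar_points(r, 0.6, 1.7):.0f} points")
```

At long range a handful of points is enough to register that something human-sized is present; only at shorter range do enough points accumulate to recognize its shape.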

Vehicle to Vehicle (V2V) Communication

Vehicle to Vehicle (V2V) and Infrastructure to Vehicle (I2V) communication is an emerging technology that will allow vehicles to receive traffic data beyond what their sensors can measure directly. This may be of value to pedestrians and cyclists in situations where sight-line obstructions prevent them from being seen until immediately before a conflict. For example, when a pedestrian crosses the street in front of a stopped vehicle, the stopped vehicle may block the view between the pedestrian and another vehicle approaching in an adjacent same-direction lane. This common crash scenario, known as multiple threat, could be mitigated if the second vehicle were to receive transmitted information about the pedestrian’s position. Some technology innovators have proposed that user presence information be transmitted by pedestrians and bicyclists themselves via mobile devices or other transponders, a premise that has generated backlash from bike/ped advocates who oppose placing instrumentation burdens on vulnerable road users. But such user presence information can be transmitted by the stopped vehicle, which can sense the pedestrian, or by the crosswalk infrastructure in those situations where sight lines may be compromised. Lastly, geometric engineering improvements such as road diets that improve pedestrian visibility with human drivers (and remove multiple threat conflicts) will also improve safety with autonomous vehicles. As long as pedestrians and bicyclists travel where they can be seen, fully autonomous vehicles will likely outperform human drivers at yielding.
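The multiple threat geometry can be sketched as a top-down sight-line test: the approaching driver’s view of the pedestrian is blocked if the straight line between them passes through the stopped vehicle’s footprint. This is a minimal 2D sketch with hypothetical coordinates; it assumes the stopped vehicle fully blocks the view (true for tall vehicles, not always for sedans) and uses Liang-Barsky line clipping for the intersection test. A stopped vehicle or crosswalk sensor that can see the pedestrian could, in principle, run this kind of check to decide when relaying presence information would help.

```python
Point = tuple[float, float]
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def segment_hits_box(p0: Point, p1: Point, box: Box) -> bool:
    """Liang-Barsky clipping: True if the straight segment p0 -> p1 passes through the box."""
    (x0, y0), (x1, y1) = p0, p1
    x_min, y_min, x_max, y_max = box
    dx, dy = x1 - x0, y1 - y0
    t_enter, t_exit = 0.0, 1.0
    for p, q in ((-dx, x0 - x_min), (dx, x_max - x0), (-dy, y0 - y_min), (dy, y_max - y0)):
        if p == 0:
            if q < 0:
                return False  # parallel to this edge and entirely outside it
            continue
        t = q / p
        if p < 0:
            t_enter = max(t_enter, t)  # crossing into the box
        else:
            t_exit = min(t_exit, t)    # crossing out of the box
        if t_enter > t_exit:
            return False
    return True


def sight_line_blocked(viewer: Point, target: Point, obstacles: list[Box]) -> bool:
    return any(segment_hits_box(viewer, target, box) for box in obstacles)


if __name__ == "__main__":
    # x runs along the road, y across it; lane 1 is y in [0, 3.5], lane 2 is y in [3.5, 7].
    stopped_car = (2.0, 0.8, 6.5, 2.7)   # yielding vehicle in lane 1
    approaching_driver = (-30.0, 5.25)   # driver in lane 2, about 30 m back
    print(sight_line_blocked(approaching_driver, (7.5, 2.0), [stopped_car]))  # True: pedestrian hidden
    print(sight_line_blocked(approaching_driver, (7.5, 4.0), [stopped_car]))  # False: visible, but already in lane 2
```

The second case captures the danger: by the time the pedestrian emerges into the approaching vehicle’s view, they are already in its lane.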

Collision Avoidance Systems

About half of new car models sold at the time of this writing are available with sensor-based collision avoidance systems; manufacturers have promised that virtually all new cars will feature automatic emergency braking (AEB) systems by 2022. The combination of forward collision warning (FCW) features (which alert a driver to take action when a collision appears possible) with first-generation AEB has shown dramatic reductions in rear-end collision rates (up to 80%) and pedestrian collision rates (up to 50%) when compared to similar models without the technology.

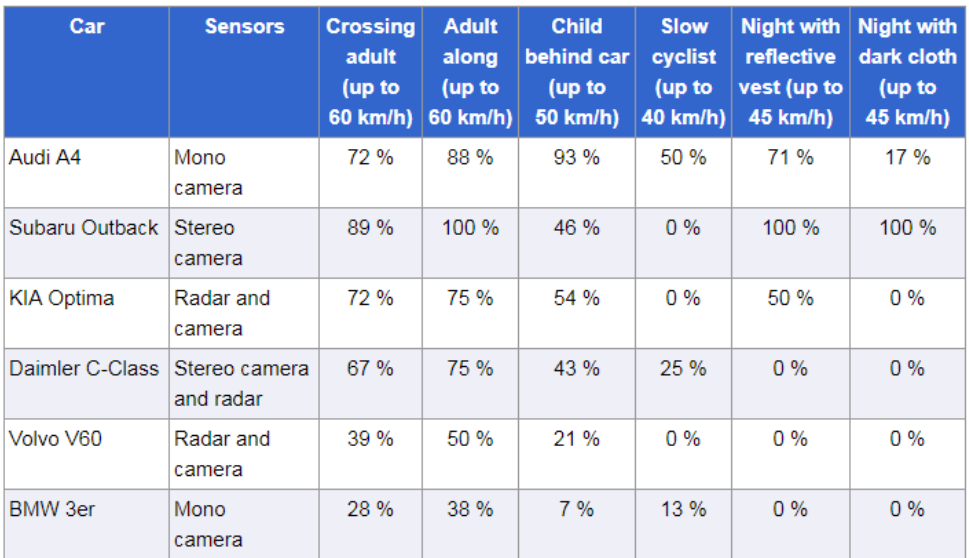

All collision avoidance systems are not created equal. Some AEB systems are designed to detect only cars, and dramatic differences in performance are found among the products designed to detect pedestrians. The European New Car Assessment Program (Euro NCAP) began performing standardized tests for pedestrian AEB systems in 2016. The tests involve pulling crash dummies across the vehicle path; the dummy arms and legs articulate in order to provide an authentic return signal for micro-doppler analysis. The most challenging test involves simulation of a child running out from behind a parked vehicle. As speeds increase, every vehicle fails the parked vehicle test. No matter how fast a computer can react to an emergency, the laws of physics dictate a car’s minimum braking distance.
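The physics limit can be made concrete with the standard stopping-distance model: distance traveled during system latency plus braking distance v²/(2a). Solving for speed gives the fastest a car can travel and still stop within a given gap, such as the distance at which a child first appears from behind a parked car. The 0.3 s latency and 9 m/s² deceleration are hypothetical values for a fast AEB system on dry pavement, not Euro NCAP parameters.

```python
import math


def stopping_distance_m(speed_kph: float, latency_s: float = 0.3, decel_mps2: float = 9.0) -> float:
    """Distance traveled during system latency plus braking distance v**2 / (2a)."""
    v = speed_kph / 3.6
    return v * latency_s + v * v / (2.0 * decel_mps2)


def max_stoppable_speed_kph(gap_m: float, latency_s: float = 0.3, decel_mps2: float = 9.0) -> float:
    """Highest speed that still stops within gap_m: positive root of v*t + v**2/(2a) = gap."""
    a, t = decel_mps2, latency_s
    v = -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * gap_m)
    return v * 3.6


if __name__ == "__main__":
    # Suppose a child emerges from behind a parked car this far ahead of the bumper.
    for gap in (5.0, 10.0, 15.0, 20.0):
        print(f"{gap:4.0f} m gap: can stop from ~{max_stoppable_speed_kph(gap):.0f} km/h")
```

However short the latency, the braking term v²/(2a) remains, so every system eventually fails the test as speed rises.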

The test results, published online, show how fast each vehicle can travel and still have the AEB system stop effectively for a pedestrian walking across the roadway. (Note that Euro NCAP does not have the resources to test every car model.) I compiled the 2017 model test results into one table, shown below, to compare the vehicles directly. Tested performance varies even among implementations using the same sensor types. Most use some combination of cameras and radar, since lidar has historically been very expensive. Some of the systems performed well at relatively high speeds, but the takeaway is that even with autonomous braking, pedestrians crossing the road are safer if vehicle speeds are limited. The worst pedestrian AEB system tested for 2017 was the Ford Mustang, which never completely avoided contact with the pedestrian dummy. It is therefore unsurprising that Ford decided to hype the pedestrian AEB technology improvements that they added for 2018.

| Max Stopping Speed (km/h) | Year | Adult Farside | Adult Nearside 25% | Adult Nearside 75% | Child Run from Parking |
|---|---|---|---|---|---|
| Volvo S90 | 2017 | 60 | 60 | 60 | 40 |
| Volvo V90 | 2017 | 60 | 60 | 60 | 40 |
| Mazda CX-5 | 2017 | 50 | 45 | 60 | 40 |
| Audi Q5 | 2017 | 60 | 40 | 50 | 40 |
| Range Rover Velar | 2017 | 40 | 40 | 40 | 45 |
| Alfa Romeo Stelvio | 2017 | 40 | 40 | 50 | 35 |
| Seat Ibiza | 2017 | 45 | 40 | 45 | 35 |
| BMW 5 | 2017 | 45 | 45 | 40 | 35 |
| Toyota C-HR | 2017 | 45 | 45 | 55 | 20 |
| Land Rover Discovery | 2017 | 40 | 40 | 40 | 40 |
| Opel Ampera E | 2017 | 35 | 40 | 45 | 35 |
| Opel Vauxhall Insignia | 2017 | 40 | 35 | 40 | 35 |
| Nissan Micra | 2017 | 30 | 40 | 50 | 30 |
| VW Arteon | 2017 | 45 | 35 | 30 | 35 |
| Kia Rio | 2017 | 20 | 25 | 45 | 35 |
| Honda Civic | 2017 | 20 | 35 | 40 | 30 |
| Skoda Kodiaq | 2017 | 45 | 25 | 25 | 25 |
| Ford Mustang | 2017 | 0 | 0 | 0 | 0 |

In 2018, Euro NCAP will begin standardized testing of AEB performance for bicyclists. The test procedure includes both crossing and longitudinal (same-direction overtaking) paths relevant to both urban and rural cyclists. In 2017, the German automobile club ADAC performed its own testing with crossing bicyclist and pedestrian movements under varied lighting. It found similar disparities between products: while some systems, such as Subaru EyeSight, performed remarkably well at pedestrian detection even in low light, the systems performed poorly in the crossing bicyclist tests.

Testing of bicyclist and pedestrian AEB systems in the United States has lagged behind Europe. NHTSA has proposed to begin testing pedestrian AEB systems in 2018 (according to conflicting reports, this may be delayed until 2019), and has not given any timeline for testing bicyclist detection.

Limitations of AEB Systems

If an AEB system were to perform hard braking at the wrong time, such as when merging in front of another vehicle, it might cause a crash rather than avoid one. “False braking events” can also frustrate vehicle owners and reduce public acceptance of AEB technology. For these reasons, many auto manufacturers program AEB systems to be very conservative, and consequently they do not always brake in time to avoid a crash. For example, a Tesla spokesperson explained that on their vehicle, “AEB does not engage when an alternative collision avoidance strategy (e.g., driver steering) remains viable.” Some have speculated that this policy may have contributed to the recent rear-ending of a stopped fire truck by a Tesla whose owner claims that the Autopilot was engaged.

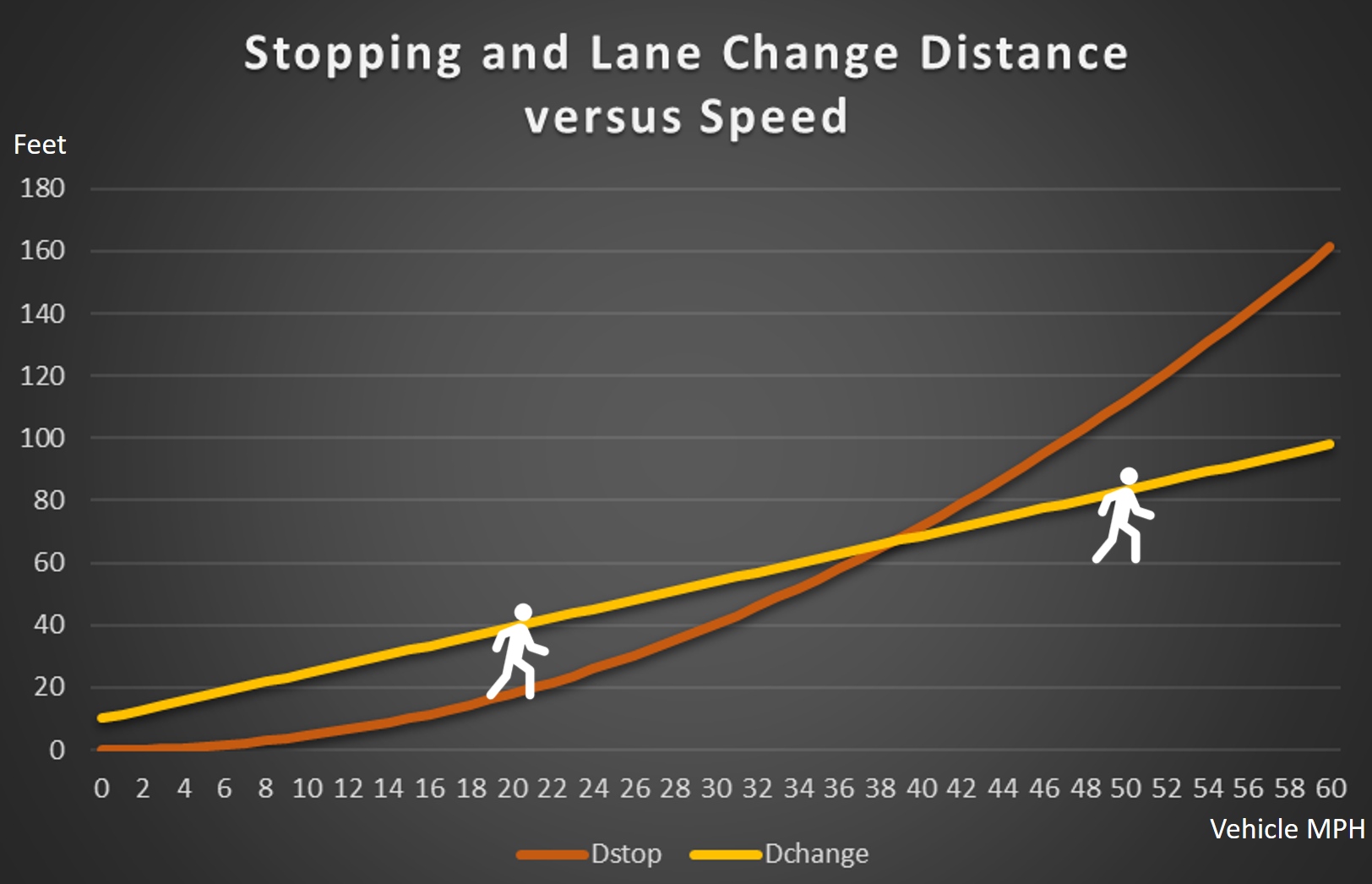

If an AEB system waits until the last second for a driver to swerve into the next lane, it may be too late to stop. AEB systems perform worse as vehicles travel faster, because stopping distance increases in proportion to the square of vehicle speed. At low speeds, there is still room to stop for a pedestrian or bicyclist after a driver fails to steer away, but at higher speeds, waiting for the driver to swerve forfeits the required stopping distance. A typical AEB system is likely to detect and brake for a bicyclist riding in the center of the lane on a low speed city street, but may fail to slow in time for a bicyclist riding on the edge of a narrow, high speed rural highway – which unfortunately is the most common scenario for car-overtaking-bicycle crashes. In contrast, a fully autonomous vehicle will not wait for a driver to respond, and can gradually slow down for slower traffic or make a safe lane change long before an emergency exists.
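The trade-off behind “waiting for the driver to swerve” can be sketched with two simplified constant-acceleration models: the distance needed to brake to a stop grows with the square of speed, while the distance needed to swerve sideways by a fixed offset grows only linearly with speed. Above a crossover speed, the last moment to steer comes after the last moment to brake, so a system that holds off braking while steering “remains viable” has already lost the chance to stop. The 8 m/s² deceleration, 5 m/s² lateral acceleration, and 1.5 m offset are assumptions for illustration.

```python
import math


def braking_distance_m(v_mps: float, decel_mps2: float = 8.0) -> float:
    """Distance to stop from v at constant deceleration: grows with the square of speed."""
    return v_mps ** 2 / (2.0 * decel_mps2)


def swerve_distance_m(v_mps: float, lateral_offset_m: float = 1.5, lat_accel_mps2: float = 5.0) -> float:
    """Distance covered while shifting sideways by lateral_offset_m, simplified as
    y = 0.5 * a_y * t**2 at constant forward speed: grows linearly with speed."""
    t = math.sqrt(2.0 * lateral_offset_m / lat_accel_mps2)
    return v_mps * t


def crossover_speed_mps(decel_mps2: float = 8.0, lateral_offset_m: float = 1.5,
                        lat_accel_mps2: float = 5.0) -> float:
    """Speed where both distances are equal: v**2 / (2a) = v * t  =>  v = 2 * a * t."""
    return 2.0 * decel_mps2 * math.sqrt(2.0 * lateral_offset_m / lat_accel_mps2)


if __name__ == "__main__":
    v_x = crossover_speed_mps()
    print(f"crossover: {v_x:.1f} m/s ({v_x * 3.6:.0f} km/h)")
    for kph in (30, 50, 90):
        v = kph / 3.6
        print(f"{kph} km/h: brake {braking_distance_m(v):5.1f} m, swerve {swerve_distance_m(v):5.1f} m")
```

With these assumed values the crossover falls around 45 km/h: below it there is still room to brake after a late swerve fails to happen, and above it there is not, consistent with the contrast between city streets and rural highways.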

Semi-Autonomous Vehicles

Although fully autonomous “driverless” vehicles are still under research and development, a variety of semi-autonomous driver-assistance products such as Tesla Autopilot are already being used by consumers today. The differences between these products pose challenges to regulators and can be confusing to users. To help distinguish various types of self-driving systems, regulators often refer to the six levels of driving automation (0 through 5) defined by SAE International.

The main differences between autonomy levels are who (or what) is responsible for monitoring the road environment, and when the human is expected to intervene should the autonomous system be incapable of handling the situation. At autonomy levels 0 and 1, the human driver must perform at least part of the steering or speed control and is therefore (one would expect) continuously engaged and monitoring the environment. At autonomy levels 4 and 5, the computer does all of the driving and the human occupant is never expected to participate.

Levels 2 and 3, often called semi-autonomous driving, are where things get complicated. With a level 2 system, such as Tesla Autopilot, the human driver is expected to continuously monitor the driving environment and take over dynamic control instantly when they realize something is happening outside of the system’s capabilities. Exactly how consumers will know which traffic situations a semi-autonomous car can handle and which it cannot is unclear. Can a semi-autonomous system handle intersections? Pedestrians in crosswalks? Bicyclists in the travel lane? Furthermore, the expectation that a human occupant will stay effectively focused on the road when a level 2 system is driving is widely derided; research has shown that people quickly zone out under such conditions and are slow to respond. With a level 3 system, the human driver is not expected to monitor the road but is expected to take over driving when the system asks them to do so.
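The division of responsibility described above can be captured as a small lookup table. This is a simplified summary of the levels as this article describes them, not a transcription of the full SAE definitions; it makes visible that levels 2 and 3 are the only ones where the human is both not driving and still the fallback.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Responsibility:
    monitors_road: str   # who watches the driving environment
    fallback: str        # who handles situations the automation cannot


# Simplified summary of the SAE levels as described in the text (not the full SAE wording).
SAE_LEVELS = {
    0: Responsibility("human", "human (human performs the driving)"),
    1: Responsibility("human", "human (human performs part of the driving)"),
    2: Responsibility("human", "human, instantly and without being asked"),
    3: Responsibility("system", "human, when the system requests a takeover"),
    4: Responsibility("system", "system"),
    5: Responsibility("system", "system"),
}


def human_must_watch_road(level: int) -> bool:
    return SAE_LEVELS[level].monitors_road == "human"


def human_is_fallback(level: int) -> bool:
    return SAE_LEVELS[level].fallback.startswith("human")


if __name__ == "__main__":
    for level, r in SAE_LEVELS.items():
        print(f"Level {level}: monitor={r.monitors_road:6s} fallback={r.fallback}")
```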

A fatal crash involving a Tesla Autopilot system running into the side of a turning truck in May 2016 attracted an investigation from the National Transportation Safety Board. In their final report the NTSB concluded that it was easy for a human driver to use the system under conditions it was not capable of handling.

“The owner’s manual stated that Autopilot should only be used in preferred road environments, but Tesla did not automatically restrict the availability of Autopilot based on road classification. The driver of the Tesla involved in the Williston crash was able to activate Autopilot on portions of SR-24, which is not a divided road, and on both SR-24 and US-27A, which are not limited-access roadways. Simply stated, the driver could use the Autopilot system on roads for which it was not intended to be used.”

The Tesla Autopilot system in question used a monocular forward-looking camera and radar. According to Tesla, the camera system mistook the side of the truck for sky, and the radar system, unable to resolve the precise height of the truck, dismissed it as a billboard. Tesla has been criticized for omitting lidar from its vehicles and relying entirely on radar and camera sensors to provide what Tesla claims will eventually be full autonomy. But the Tesla crash also illustrates the fragility of a design that requires an object to be classified before it is treated as a detected, localized obstacle. A stereo camera system with depth perception, for example, would likely detect an imminent collision with a crossing obstacle in the roadway even if it failed to classify the object in the scene as a truck.

Operational Design Domain (ODD)

The use, or misuse, of semi-autonomous systems under conditions beyond their capability brings us to the concept of Operational Design Domain, or ODD. Again, from the NTSB report:

“The ODD refers to the conditions in which the automated system is intended to operate. Examples of such conditions include roadway type, geographic location, clear roadway markings, weather condition, speed range, lighting condition, and other manufacturer-defined system performance criteria or constraints.”

…

“The Insurance Institute for Highway Safety recognized the importance of ODDs in its comments on the AV Policy, as follows: ‘Driving automation systems should self-enforce their use within the operational design domain rather than relying on users to do so’ (Kidd 2016).”

If a semi-autonomous driving system is incapable of handling intersections, pedestrians, or bicyclists, a self-enforcing feature may use map data – aka geofencing – to prevent it from being activated outside of fully controlled access highways, i.e. freeways. This is how the Cadillac Super Cruise system works: The driver can only activate the system if the GPS indicates the car is on a freeway. Super Cruise also features a driver-facing camera that warns the driver if they look away from the road for too long. If the driver does not return their attention to the road after repeated alerts, Super Cruise will eventually slow the vehicle to a stop. Whether these precautions will be safe enough in practice (and whether Tesla and other manufacturers will follow suit) remains to be seen.
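The self-enforcement idea can be sketched as two small checks: refuse to engage unless the map confirms a road class inside the ODD, and escalate when a driver-facing camera reports the driver’s eyes off the road. This is a hypothetical sketch of the concept, not how Super Cruise or any other product is implemented; the road classes, function names, and timing thresholds are all invented for illustration.

```python
from enum import Enum


class RoadClass(Enum):
    LIMITED_ACCESS = "limited-access divided highway"  # freeway: no crossings, no bike/ped traffic
    DIVIDED_ARTERIAL = "divided arterial"
    UNDIVIDED = "undivided road"
    LOCAL = "local street"


# Hypothetical ODD: only fully controlled-access highways.
ALLOWED_ROAD_CLASSES = frozenset({RoadClass.LIMITED_ACCESS})


def may_engage(road_class: RoadClass, map_match_confident: bool) -> bool:
    """Refuse to engage unless the map confirms the car is on a road class inside the ODD."""
    return map_match_confident and road_class in ALLOWED_ROAD_CLASSES


def attention_response(eyes_off_road_s: float, warn_s: float = 4.0,
                       escalate_s: float = 8.0, stop_s: float = 15.0) -> str:
    """Escalating response to driver inattention; thresholds are made-up placeholders."""
    if eyes_off_road_s < warn_s:
        return "none"
    if eyes_off_road_s < escalate_s:
        return "visual alert"
    if eyes_off_road_s < stop_s:
        return "audible alert"
    return "slow to a stop"


if __name__ == "__main__":
    print(may_engage(RoadClass.LIMITED_ACCESS, True))   # True
    print(may_engage(RoadClass.UNDIVIDED, True))        # False: outside the ODD
    print(attention_response(10.0))                      # audible alert
```

Treating an uncertain map match as “not allowed” keeps the failure mode on the safe side: the system stays off rather than engaging on a surface street.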

Fully Autonomous Vehicles

Two autonomous car companies, Google’s Waymo and GM’s Cruise Automation, are currently well ahead of other research and development groups when it comes to testing and experience driving safely around bicyclists and pedestrians in the real world. Cruise Automation performs much of its autonomous car testing in downtown San Francisco for the explicit purpose of encountering complicated situations, including lots of bicyclists. Says Kyle Vogt, founder of Cruise Automation: “Our vehicles encounter cyclists 16x as often in SF than in suburbs, so we’ve invested considerably in the behavior of our vehicles when a cyclist is nearby.”

Google cars regularly demonstrate their ability to detect and brake for bicyclists and pedestrians; the video below highlights a surprise encounter with a wrong-way bicyclist.

Google Car Detects Wrong-Way Bicyclist (at 24:58)

A later section of the same video (at 26:15) shows the Google car encountering a woman in a wheelchair chasing a duck with a broom.

The behavior of bicyclists affects the reliability with which autonomous cars will be able to accommodate their movements. Olaf Op den Camp, who led the design of Europe’s cyclist-AEB benchmarking test, explained to IEEE Spectrum that some bicyclists’ movements are hard to predict. Jana Košecká, a computer scientist at George Mason University in Fairfax, Virginia, agrees that bicyclists are “much less predictable than cars because it’s easier for them to make sudden turns or jump out of nowhere.” To an autonomous vehicle developer, the unpredictable movements of some bicyclists who make up their own traffic rules are cause for alarm. Vehicle manufacturers want to minimize risk of collision regardless of fault. But to responsible bicyclists, it may be empowering to know that riding predictably and lawfully makes a difference.

When encountering a situation that seems uncertain, it’s best for an autonomous vehicle to err on the side of caution and stop, even if it doesn’t know how or when to start again, as in the wheelchair video. This condition, dubbed “Frozen Robot Syndrome,” may hinder the adoption of autonomous cars and/or encourage incorporation of remote teleoperation capability as a fallback, but is an essential failsafe. A widely reported traffic negotiation “failure” between a Google car and a track-standing bicyclist at a four-way stop was essentially a case of frozen robot syndrome, where the Google car attempted to yield every time the bicyclist wobbled. A human driver would have given up and gone ahead of the bicyclist, but the Google car’s deference posed no danger to the bicyclist.

Waymo and Cruise test their autonomous driving algorithms extensively – and not only in real-world cities, but also in virtual reality simulations where complex, worst-case scenarios can be repeated again and again without putting people at risk. The main virtue that critics might find lacking in these test procedures is public transparency. Car companies often hold their internal test results close to the chest, making it hard to tell just how reliable their products are. When autonomous cars are tested on public streets in California, the DMV requires companies to report annually the number of disengagement events – the number of times when a human must intervene and take over driving. But without knowing the road conditions and traffic challenges they are being tested against, it is difficult for outsiders to make apples to apples comparisons. Third party testing may be the only way for the public to obtain a clear and unbiased measurement of autonomous vehicle safety.

Human/Robot Interactions

Pedestrian communication with autonomous vehicles may differ from communication with human drivers, who often wave pedestrians across the street. Researchers at Duke University’s Humans and Autonomy Lab have studied the use of electronic displays to indicate a vehicle’s intentions to pedestrians. Such displays add a degree of friendliness to an interaction that is already impersonal today when human drivers are hidden by tinted windows. Experienced pedestrians and cyclists learn to read a vehicle’s “body language” such as acceleration/deceleration rate, lane position, and steering angle; these cues will still apply to autonomous vehicles. Last but not least, there is the conventional wisdom of making eye contact with a stopped driver to make sure they see you before crossing in front of them. Why would a pedestrian be concerned about this with an autonomous car if they know that an autonomous car always sees them? Adam Millard-Ball, professor of Environmental Studies at UC Santa Cruz, writes that as pedestrians and bicyclists become more confident that autonomous vehicles will yield to them more reliably than human drivers do, walking and cycling may come to dominate many city streets, resulting in changes in transportation habits and urban form.

Conclusions for Bike/Ped Safety Advocates

Fully autonomous vehicles offer the potential to greatly improve public safety, including the safety of pedestrians and cyclists. However, some of the semi-autonomous self-driving products entering the market may pose increased risks to vulnerable users due to insufficient sensing capabilities, especially if human drivers lapse in attention after activating them on surface streets. Government regulation may be required to restrict such semi-autonomous systems to operational design domains where they cannot harm pedestrians and bicyclists. Geo-fencing to fully controlled access highways is one feasible way to accomplish this self-enforcement.

A lack of transparency in testing has made the safety of autonomous driving systems and most automatic emergency braking systems a mystery to the public. The capabilities and performance of automated systems vary widely between manufacturers and models. Standardized testing, especially by a third party, is required to keep unsafe implementations of these technologies off of public roadways and to compel manufacturers to improve under-performing systems. This may require a significant increase of funding for NHTSA and Euro NCAP testing programs.

Engineering and enforcement changes that produce slower speeds, shorter crossing distances, and better sight lines improve public safety with automated vehicles just as they do with human drivers. Although automated systems may react faster than human drivers in an emergency, automation doesn’t change the laws of physics that increase braking distances and place pedestrians and bicyclists at much higher risk when vehicle speeds increase. Reaching Vision Zero – the goal of eliminating traffic deaths – will require implementing safe vehicle speeds and effective crossing facilities in areas populated with human beings.

Steven Goodridge earned his Ph.D. in electrical engineering at North Carolina State University, where his research involved fully autonomous mobile robots, collision avoidance systems, computer vision, and sensor fusion. Steven currently develops advanced sensor systems for the law enforcement and defense community at Signalscape, Inc. in Cary, NC. He is a certified Cycling Savvy instructor and Master League Cycling Instructor.

Scott McKinley says

Great article – thanks. As a cyclist who has had a femur shattered in a collision with a car, I have some real trepidation about the viability of autonomous vehicles.

Have smaller animals ever entered into the discussion about the capabilities of these vehicles? Cats, dogs less than 20 lbs, possums, and squirrels come to mind. It would be a shame if the number of road kills were to spike because of autonomous technology in cars.